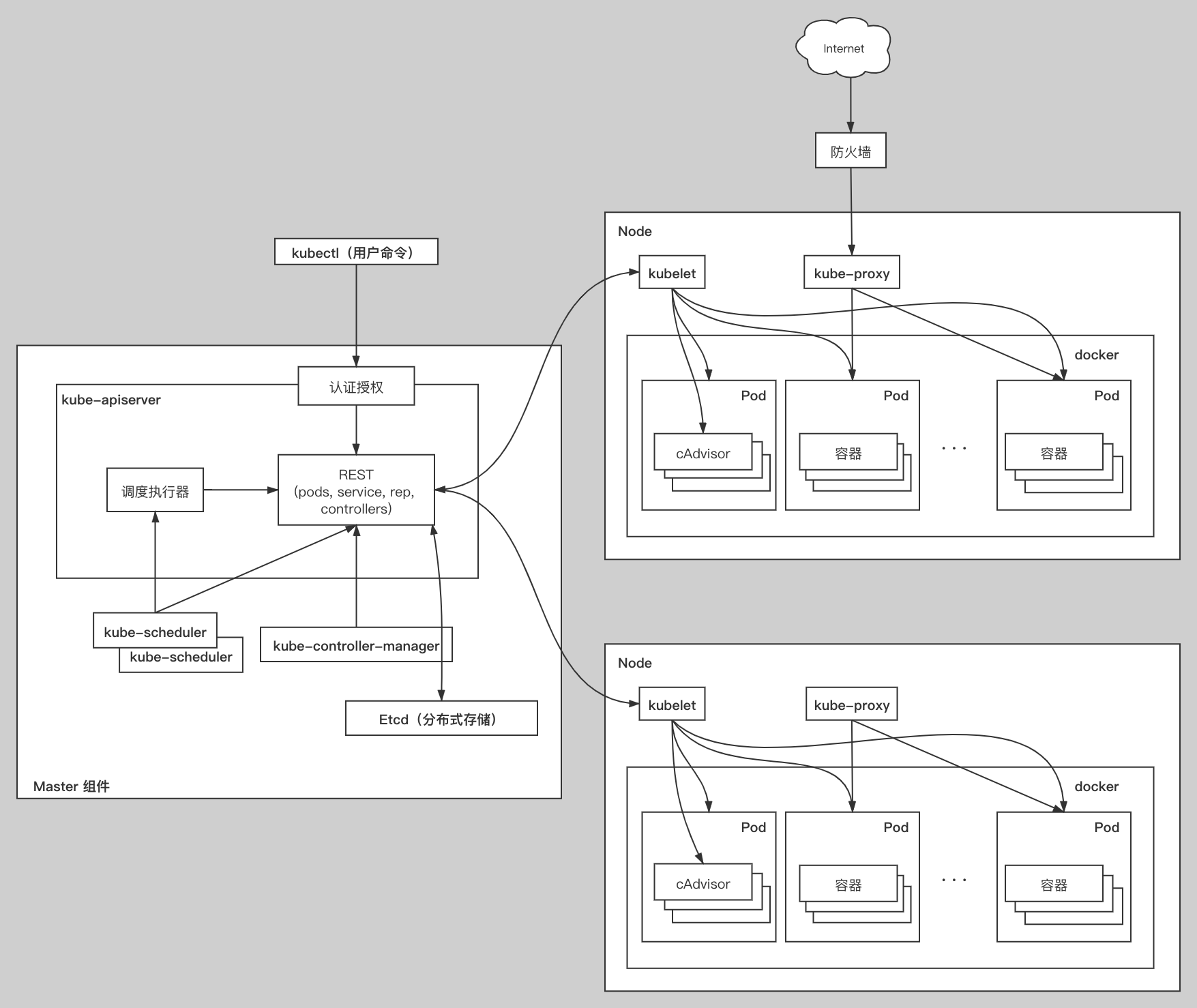

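- Where is the application deployed?

- Where does the application run?

- How should the application be accessed?

- How should problems be troubleshot?

- How should the application be monitored?

Pod

A Pod is a logical unit for managing a group of processes that share resources.

Under the hood it still relies on Linux namespaces and cgroups.

- Network sharing: the first container created in a Pod is the infra (pause) container, which holds the Pod's network namespace. The other containers join that namespace, as with Docker's `--net=container:<infra-container>` mode, so all containers in the Pod share one network stack.

- Storage sharing: a volume is declared at the Pod's top level, and every container that needs the shared data mounts this Pod-level volume.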

```yaml
# hostPath + sidecar: a common pattern for collecting container logs
apiVersion: v1
kind: Pod
metadata:
  name: counter
spec:
  volumes:                        # Pod-level volume declared at the top of spec
    - name: varlog
      hostPath:                   # back the volume with a directory on the host
        path: /root/log/counter   # host directory that stores the volume
        type: DirectoryOrCreate   # create the directory if it does not exist
  containers:
    - name: count
      image: busybox
      args:
        - /bin/sh
        - -c
        - >
          i=0;
          while true;
          do
          echo "$i: $(date)" >> /var/log/1.log;
          i=$((i+1));
          sleep 1;
          done
      volumeMounts:               # the container mounts the Pod-level volume
        - name: varlog            # name of the volume declared under spec.volumes
          mountPath: /var/log     # directory inside this container
    - name: count-log             # sidecar that reads the same log file
      image: busybox
      args: [/bin/sh, -c, 'tail -n+1 -f /var/log/1.log']
      volumeMounts:
        - name: varlog
          mountPath: /var/log
```

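Replication Controller (RC)

ReplicaSet (RS)

A ReplicationController keeps the desired number of Pod replicas running across the cluster's nodes. It creates or deletes Pods whenever the number of running replicas drifts from the desired count.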
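To illustrate what "Pods matching the selector" means, the sketch below implements an equality-based label match in bash. Every `key=value` pair in the selector must appear among the Pod's labels. It is a simplified model for intuition, not the controller's code. The function name `selector_matches` and the comma-separated label format are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: equality-based label selector check.
# $1: selector, $2: pod labels, both as comma-separated key=value lists.
# Returns 0 when every key=value of the selector is present in the labels.
selector_matches() {
  local -a sel labels
  local kv
  IFS=, read -ra sel <<< "$1"
  IFS=, read -ra labels <<< "$2"
  for kv in "${sel[@]}"; do
    # pad with spaces so "app=nginx" does not match "app=nginx2"
    [[ " ${labels[*]} " == *" $kv "* ]] || return 1
  done
}
```

For the RC below, `selector_matches "app=nginx" "app=nginx"` succeeds. Every Pod created from its template therefore counts toward `replicas`.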

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-rc
spec:
  replicas: 3
  # an RC selector is a plain key/value map (equality-based only);
  # unlike ReplicaSet/Deployment it has no matchLabels/matchExpressions
  selector:
    app: nginx
  template:
    metadata:
      name: nginx
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
```

Deployment (stateless application management)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells the Deployment to run 2 Pods matching the template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
```

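YAML files

Service configuration and management on the cloud platform.

A manifest = controller declaration + declaration of the controlled object.

- The number of spaces used for indentation does not matter, as long as elements at the same level are left-aligned (tabs are not allowed).

- `---` is an optional separator. It is needed when one file defines several objects.

- Maps

```yaml
# key-value pairs
apiVersion: v1
kind: Pod
# nested maps: keys at the same indentation belong to the same parent key
metadata:
  name: demo
  labels:
    app: nginx
```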

- List

```yaml
containers:
  - name: front-end
    image: nginx
    ports:
      - containerPort: 80
  - name: flaskapp-demo
    image: jcdemo/flaskapp
    ports:
      - containerPort: 8080
# equivalent inline form:
# containers = [{name: front-end, image: nginx, ports: [{containerPort: 80}]},
#               {name: flaskapp-demo, image: jcdemo/flaskapp, ports: [{containerPort: 8080}]}]
```

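| Field | Description |
| --- | --- |
| apiVersion | API version |
| kind | Resource type |
| metadata | Resource metadata |
| spec | Resource specification |
| replicas | Number of replicas |
| selector | Label selector |
| template | Pod template |
| metadata | Pod metadata (inside `template`) |
| spec | Pod specification (inside `template`) |
| containers | Container configuration |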

```shell
# kubectl api-versions
admissionregistration.k8s.io/v1
apiextensions.k8s.io/v1
apiregistration.k8s.io/v1
apps/v1                               # common application-level APIs: Deployments, ReplicaSets, StatefulSets, DaemonSets
authentication.k8s.io/v1
authorization.k8s.io/v1
autoscaling/v1                        # HorizontalPodAutoscaler: scales workloads based on resource-usage metrics
autoscaling/v2
batch/v1                              # batch and job-like workloads: Job, CronJob
certificates.k8s.io/v1
coordination.k8s.io/v1
crd.projectcalico.org/v1
discovery.k8s.io/v1
events.k8s.io/v1
flowcontrol.apiserver.k8s.io/v1beta2
flowcontrol.apiserver.k8s.io/v1beta3
networking.k8s.io/v1                  # Ingress (and NetworkPolicy)
node.k8s.io/v1
policy/v1
rbac.authorization.k8s.io/v1          # RBAC
scheduling.k8s.io/v1
storage.k8s.io/v1
storage.k8s.io/v1beta1
v1                                    # the stable core API group, with core objects such as Pod and Service
```

```yaml
apiVersion: v1            # required: API version, e.g. v1
kind: Pod                 # required: resource type
metadata:                 # required: metadata
  name: nginx             # required: Pod name
  labels:                 # custom labels
    app: nginx            # custom label key and value
spec:                     # required: detailed definition of the Pod's containers
  containers:             # required: container list (a Pod can hold several containers)
    - name: nginx         # required: container name
      image: nginx        # required: container image
      imagePullPolicy: IfNotPresent  # [Always | Never | IfNotPresent] Always: always pull; IfNotPresent: use the local image if present, otherwise pull; Never: only use the local image
      ports:              # list of ports to expose
        - containerPort: 80   # port the container listens on
  restartPolicy: Always   # [Always | OnFailure | Never] Always: kubelet restarts containers however they exit; OnFailure: only on a non-zero exit code; Never: never restart
---
```

Persistent storage (Volume and PersistentVolume)

Volume, PV, PVC, StorageClass

```yaml
---
# emptyDir volume, k8s 1.26
apiVersion: v1
kind: Pod
metadata:
  name: producer-consumer
  labels:
    name: producer-consumer
spec:
  containers:
    - name: producer
      image: busybox
      volumeMounts:
        - mountPath: /producer_dir
          name: shared-volume
      args:
        - /bin/sh
        - -c
        - echo "hello world" > /producer_dir/hello; sleep 30000
    - name: consumer
      image: busybox
      volumeMounts:
        - mountPath: /consumer_dir
          name: shared-volume
      args:
        - /bin/sh
        - -c
        - cat /consumer_dir/hello; sleep 30000
  volumes:
    - name: shared-volume
      emptyDir: {}
```

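PV (PersistentVolume): the volume that actually persists the data. Examples are a directory on the host or remote storage such as NFS.

```yaml
# create a PV, k8s 1.26
apiVersion: v1
kind: PersistentVolume          # resource kind of a PV
metadata:
  name: nfs-pv
spec:
  storageClassName: nfs-scn     # StorageClass this PV belongs to
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  nfs:                          # PV type
    server: 12.345.1.1          # placeholder: replace with your NFS server's IP (this one is not a valid IPv4 address)
    path: "/"
```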

PVC (PersistentVolumeClaim): a description of the persistent storage a Pod wants to use (its requirements), such as size and access mode.

```yaml
---
# PVC that binds to the PV above, k8s 1.26
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs-scn     # must match the PV's storageClassName
  resources:
    requests:
      storage: 1Gi              # the bound PV must offer at least this much
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-frontend            # metadata.name is required (the name itself is an illustrative choice)
  labels:
    role: web-frontend
spec:
  containers:
    - name: web
      image: nginx:1.7.9
      ports:
        - name: web
          containerPort: 80     # port of the container in the Pod
      volumeMounts:
        - name: nfs
          mountPath: "/usr/share/nginx/html"   # directory in the web container backed by the volume
  volumes:
    - name: nfs
      persistentVolumeClaim:    # use a PVC as the volume source
        claimName: nfs-pvc      # once the Pod is created, kubelet mounts the PV bound to this PVC at mountPath
```

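A PVC is the Pod-facing interface to storage. It only describes what the Pod needs and gets bound to a PV. The PV is what actually implements persistence.

A Pod is deliberately fragile. If any declaration is wrong, missing, or unmatched, the Pod would rather fail than start and do something uncontrolled. For example, a Pod whose PVC is still unbound stays Pending.

Volume Controller -> PersistentVolumeController

The PersistentVolumeController handles the PVCs submitted by users. As soon as a suitable PV appears, it binds the PVC to it.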
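Below is a simplified sketch of the checks involved when a PVC is matched to a PV, using the fields from the YAML above:

- the same `storageClassName`;
- the PV offers every requested access mode;
- the PV's capacity is at least the requested size.

This is an assumption-laden model, not the real PersistentVolumeController. The real controller also considers volume mode, label selectors and node affinity, and prefers the smallest PV that fits. Sizes are plain Gi integers here, and `pv_fits_pvc` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical, simplified PV/PVC match check (not the real controller logic).
# Usage: pv_fits_pvc PV_CLASS PV_MODES PV_GI PVC_CLASS PVC_MODES PVC_GI
#   *_MODES: comma-separated access modes, e.g. "ReadWriteOnce,ReadOnlyMany"
#   *_GI:    sizes as whole Gi
pv_fits_pvc() {
  local pv_class=$1 pv_modes=",$2," pv_gi=$3
  local pvc_class=$4 pvc_modes=$5 pvc_gi=$6
  local -a wanted
  local mode
  # 1. the PVC and PV must name the same StorageClass
  [[ $pv_class == "$pvc_class" ]] || return 1
  # 2. the PV must be at least as large as the request
  (( pv_gi >= pvc_gi )) || return 1
  # 3. every requested access mode must be offered by the PV
  IFS=, read -ra wanted <<< "$pvc_modes"
  for mode in "${wanted[@]}"; do
    [[ $pv_modes == *",$mode,"* ]] || return 1
  done
}
```

With the manifests above, `pv_fits_pvc nfs-scn ReadWriteMany 1 nfs-scn ReadWriteMany 1` succeeds. The call fails as soon as the PVC asks for 2 Gi or a different StorageClass.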

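A StorageClass can be thought of as a template for creating PVs. It declares:

- the properties of the PVs to create, such as the disk type (the size comes from the PVC)

- the storage plugin (provisioner) used to create them, such as Ceph

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: block-service
provisioner: kubernetes.io/gce-pd   # GCE PD provisioner
parameters:                         # parameters for the PVs it creates
  type: pd-ssd                      # SSD-backed GCE persistent disk
```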

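Networking

The network stack (what processes requests and responses) consists of:

- Network interface

- Loopback device

- Routing table

- iptables rules

Docker creates a bridge named docker0 on the host. Containers attached to docker0 can talk to each other through it.

A Veth Pair is a pair of virtual network devices: a packet sent into one end comes out of its peer. The two ends can therefore sit in different Network Namespaces and connect them.

In other words, a container process confined to its own Network Namespace exchanges data with other containers through a Veth Pair device plus the host's bridge.

- [flannel]

- [calico]

- [ingress]

Ingress: a load-balancing service that proxies traffic to different backend Services.

In effect, Ingress is a "Service of Services".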

```yaml
---
# Ingress (networking.k8s.io/v1; extensions/v1beta1 Ingress was removed in Kubernetes 1.22)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: cafe-ingress
spec:
  tls:
    - hosts:
        - cafe.example.com
      secretName: cafe-secret
  rules:                          # IngressRule list
    - host: cafe.example.com      # must be a fully qualified domain name, not an IP address
      http:
        paths:
          - path: /tea            # path routed to this backend Service
            pathType: Prefix
            backend:
              service:
                name: tea-svc     # backend Service
                port:
                  number: 80      # Service port
          - path: /coffee
            pathType: Prefix
            backend:
              service:
                name: coffee-svc
                port:
                  number: 80
```

The Ingress object above is essentially Kubernetes' abstraction of a reverse proxy; compare it with how an nginx configuration works. The object is implemented and enforced by an Ingress Controller.

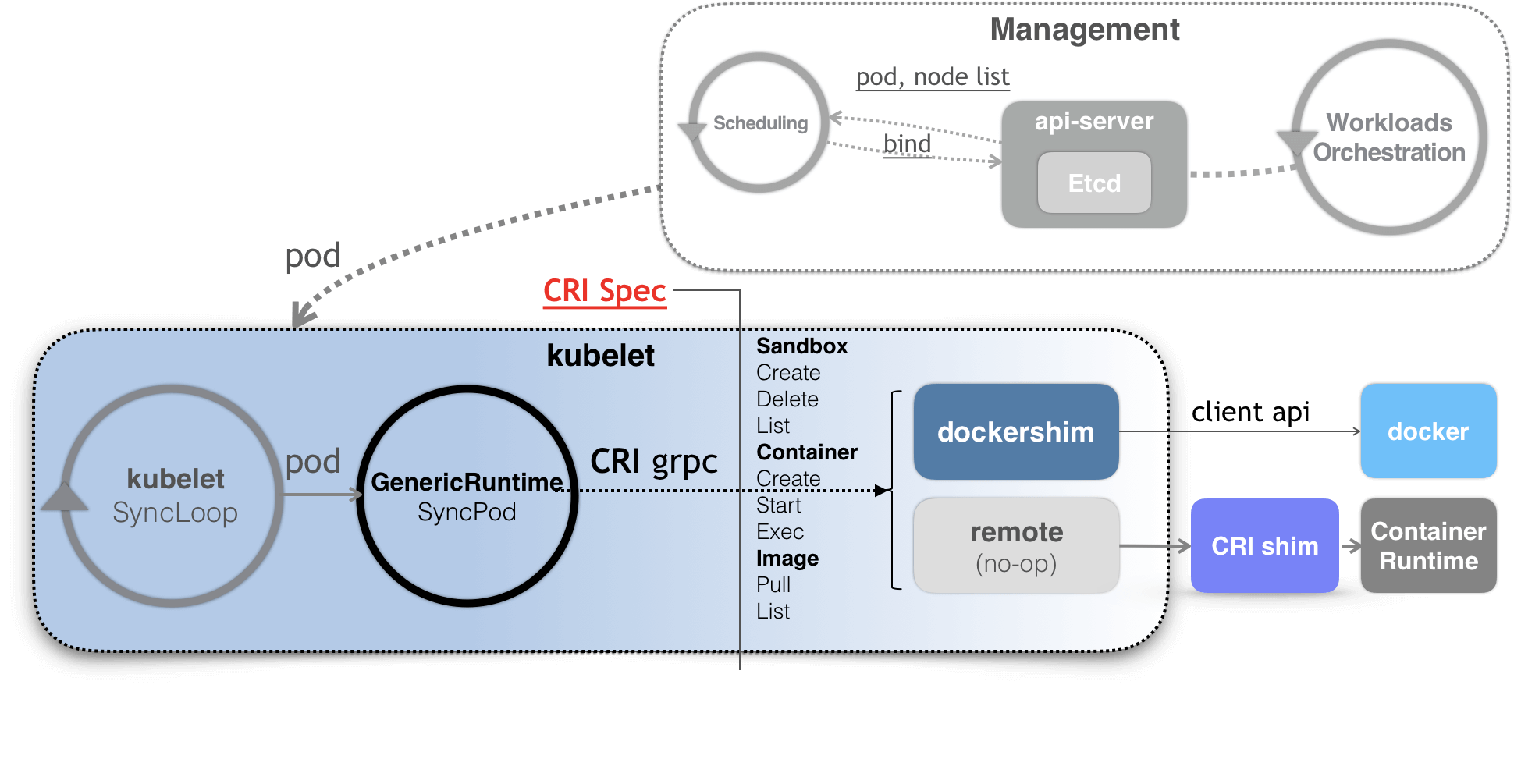

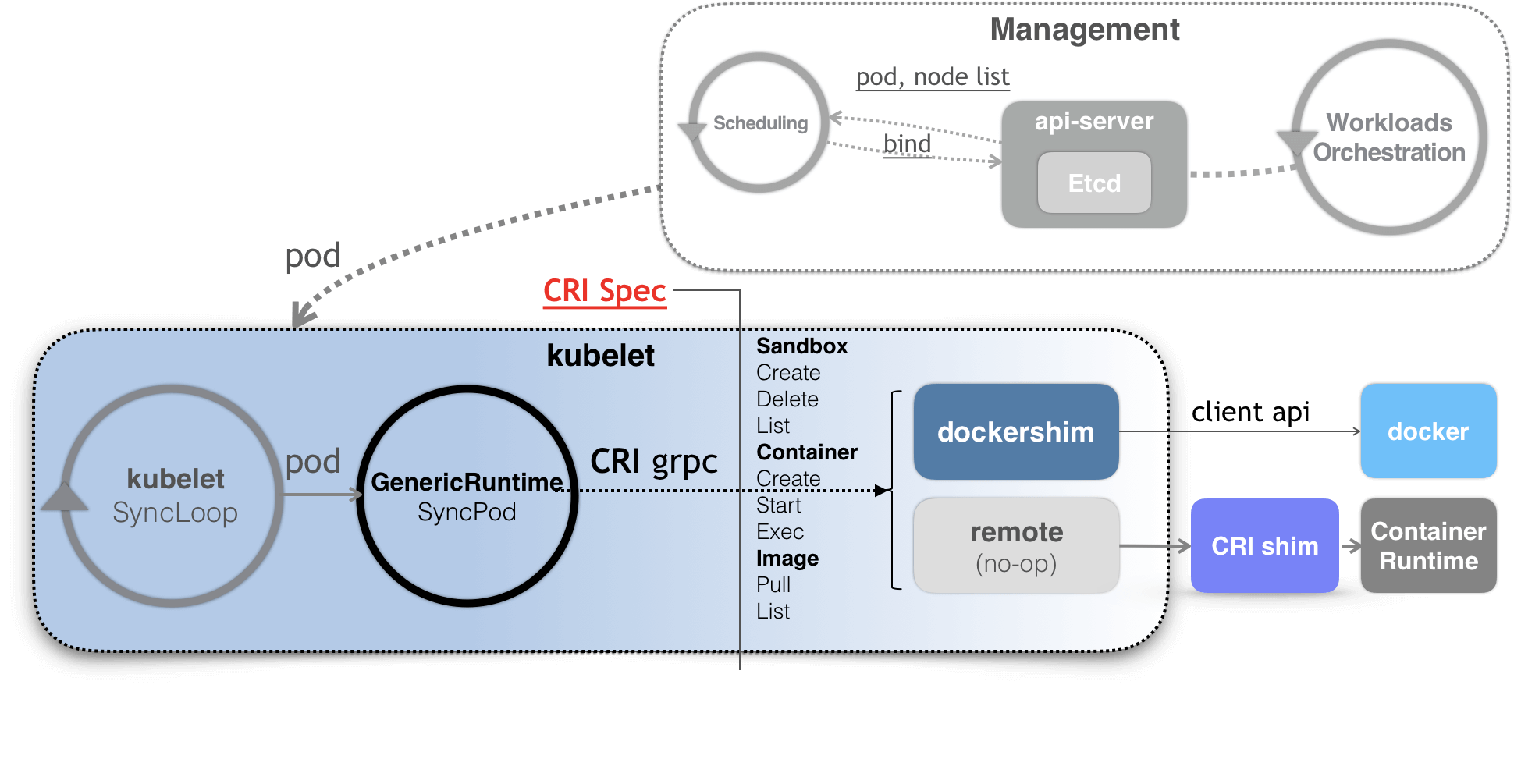

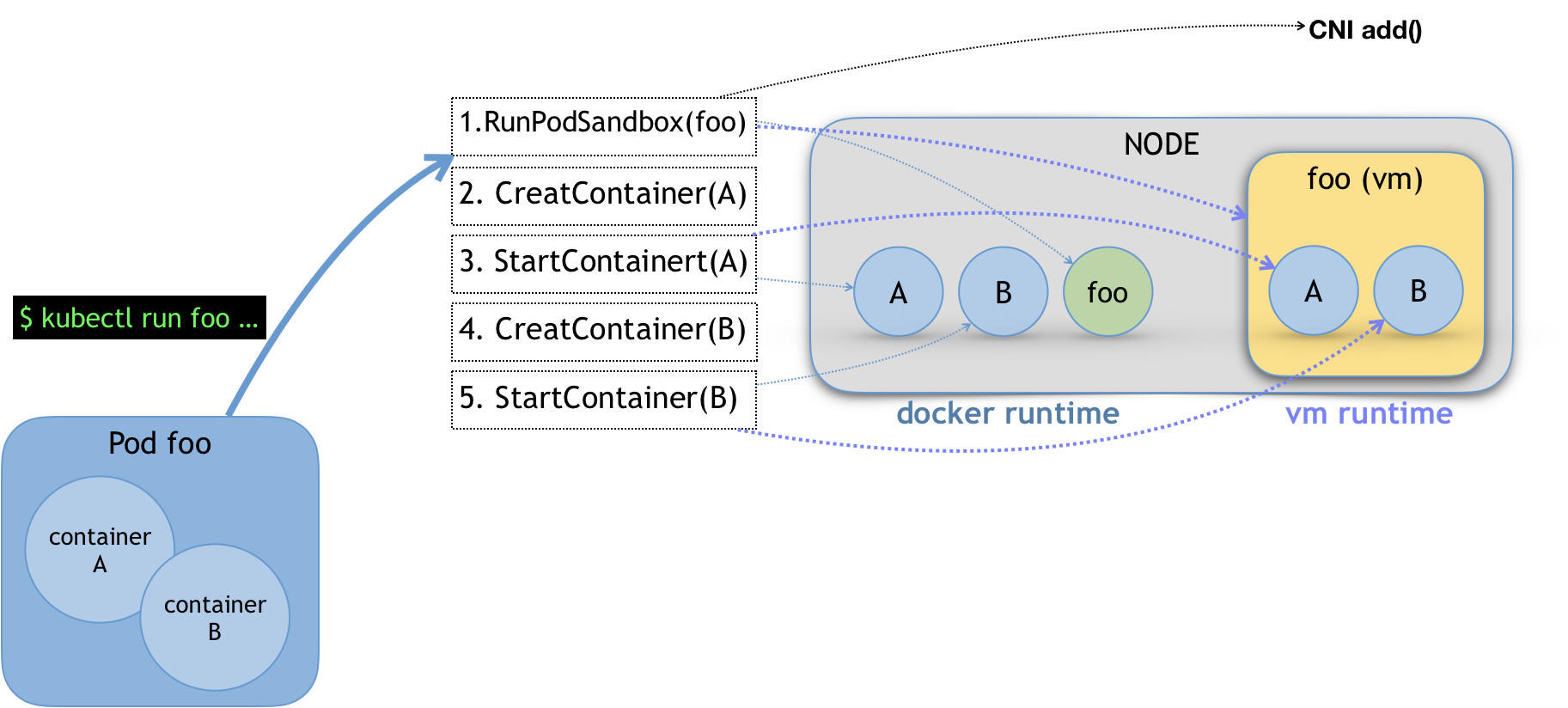

[runtimeCRI]

CRI Work Flow

kubelet

SIG-Node和kubelet编排系统跟容器交互的核心场所.

kubelet的核心工作如下:

- Pod 更新事件

- Pod 生命周期变化

- kubelet 本身设置的执行周期

- 定时的清理事件

CRIshim 完成kubelet和容器间交互操作.

“Dockershim removed from Kubernetes

As of Kubernetes 1.24, Dockershim is no longer included in Kubernetes. ”

CRI功能两组:

RuntimeService, 主要跟容器相关. 如创建,启动容器等ImageService, 主要和容器镜像相关. 如拉取,删除镜像等

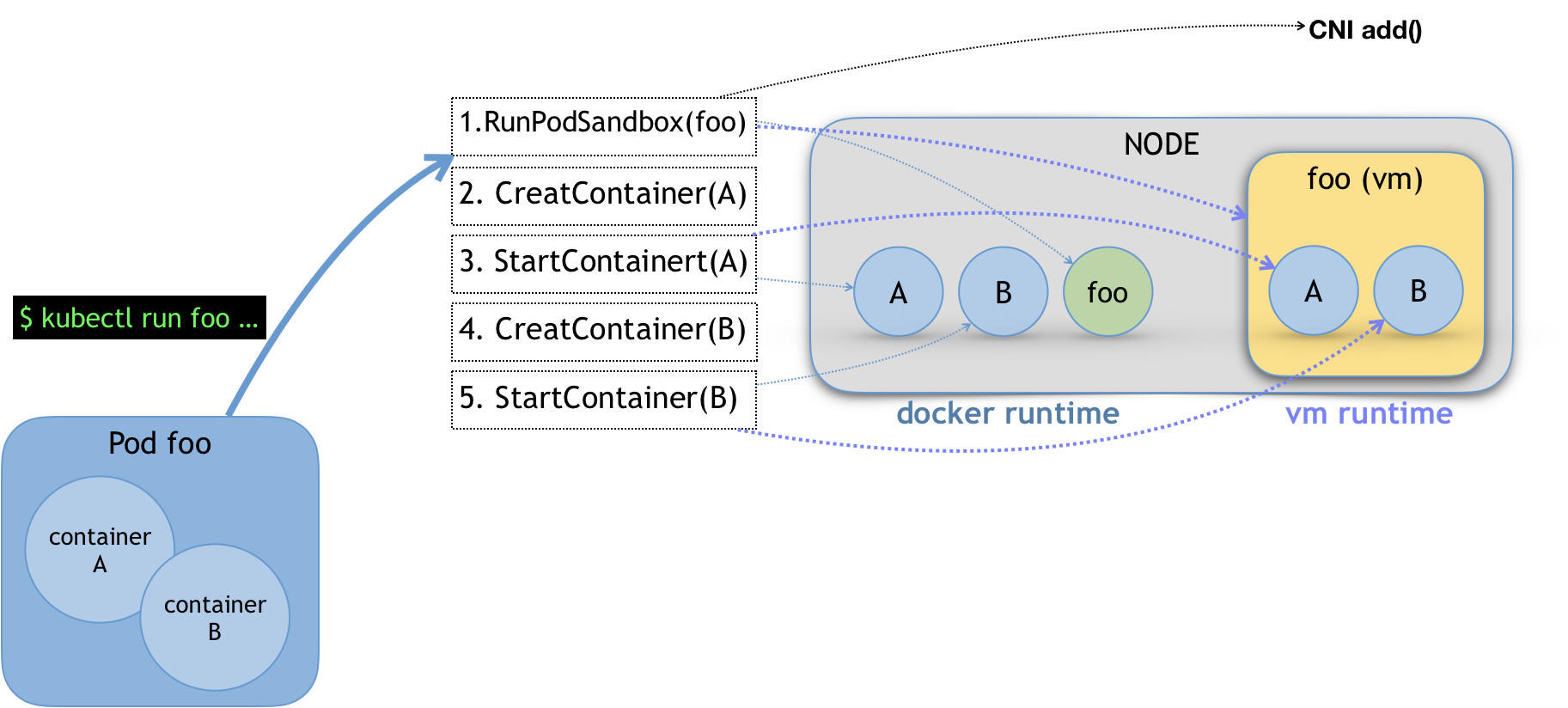

Pod是编排概念, 而不是运行概念.

kubectl run…

监控和日志

以Prometheus为核心的监控和日志系统.

Installing a highly available k8s cluster from binaries

- Service CIDR: 10.96.0.0/16

- Node network: 192.168.163.0/24

- Pod CIDR: 172.16.0.0/12
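Two conditions follow from this address plan:

- the three ranges must not overlap;
- addresses baked into certificates later, such as 10.96.0.1 (the first Service IP), must fall inside the Service CIDR.

Below is a minimal pure-bash sketch for checking both. It handles IPv4 only and assumes well-formed input. The function names are hypothetical helpers, not part of any Kubernetes tool.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# Print "network_int mask_int" for a CIDR such as 10.96.0.0/16.
cidr_bounds() {
  local ip=${1%/*} len=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  echo "$(( $(ip_to_int "$ip") & mask )) $mask"
}

# Return 0 if IP $2 lies inside CIDR $1.
cidr_contains() {
  local net mask
  read -r net mask <<< "$(cidr_bounds "$1")"
  (( ($(ip_to_int "$2") & mask) == net ))
}

# Return 0 if CIDRs $1 and $2 overlap (one contains the other's network).
cidr_overlap() {
  local n1 m1 n2 m2
  read -r n1 m1 <<< "$(cidr_bounds "$1")"
  read -r n2 m2 <<< "$(cidr_bounds "$2")"
  (( (n1 & m2) == n2 || (n2 & m1) == n1 ))
}
```

For example, `cidr_overlap 10.96.0.0/16 172.16.0.0/12 && echo overlap` prints nothing for this plan. `cidr_contains 10.96.0.0/16 10.96.0.1` succeeds.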

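Start three virtual machines (vm12), each with 2 CPU cores and 4 GB of RAM.

Configure the network on every VM node:

```shell
# vi /etc/sysconfig/network
#>> NETWORKING=yes
#>> HOSTNAME=master
# on CentOS 7 the hostname itself is set with hostnamectl (k8s-node1 / k8s-node2 on the workers)
# hostnamectl set-hostname k8s-master
```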

Configure the ens interface. Look up the VM network's gateway, then edit ens33 (or ens32, depending on the VM) on the master and on each worker node.

```shell
# vi /etc/sysconfig/network-scripts/ifcfg-ens33
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
# changed from BOOTPROTO=dhcp to a static IP
BOOTPROTO=static
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
# delete the UUID=XXXX-XXXX-XXXX line
# changed from ONBOOT=no so the interface comes up at boot
ONBOOT=yes
# k8s-master IP address; use 192.168.163.101 on k8s-node1 and 192.168.163.102 on k8s-node2
IPADDR=192.168.163.100
# added
NETMASK=255.255.255.0
# added; the NAT subnet and gateway are shown under Edit → Virtual Network Editor
GATEWAY=192.168.163.2
NAME=ens33
DEVICE=ens33
# added
DNS1=192.168.163.2
DNS2=114.114.114.114
```

Configure hosts

```shell
# vi /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.163.100 k8s-master
192.168.163.101 k8s-node1
192.168.163.102 k8s-node2
```
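Appending to /etc/hosts by hand is easy to get wrong when the step is repeated on several nodes. Below is a small sketch of an idempotent append. It assumes the hostname alone identifies an entry, and `add_hosts_entries` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" pairs to a hosts file, skipping
# any hostname that already appears, so the step can be re-run safely.
# Usage: add_hosts_entries FILE IP1 NAME1 [IP2 NAME2 ...]
add_hosts_entries() {
  local file=$1; shift
  local ip name
  while (( $# >= 2 )); do
    ip=$1 name=$2; shift 2
    # match the name as a whole field (so k8s-node1 does not match k8s-node10)
    grep -qE "[[:space:]]$name([[:space:]]|\$)" "$file" \
      || printf '%s %s\n' "$ip" "$name" >> "$file"
  done
}
```

Usage: `add_hosts_entries /etc/hosts 192.168.163.100 k8s-master 192.168.163.101 k8s-node1 192.168.163.102 k8s-node2`.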

Reboot.

System configuration

Stop the iptables firewall

```shell
# service iptables stop
# systemctl disable iptables
```

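Disable SELinux

```shell
# check the current SELinux mode
# getenforce
# disable SELinux permanently (takes effect after a reboot)
# vi /etc/selinux/config
#>> SELINUX=disabled
# switch to permissive mode right away for the current boot
# setenforce 0
```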

Disable firewalld

```shell
# systemctl stop firewalld
# systemctl disable firewalld
```

Turn off swap

```shell
# swapoff -a
# sed -i '/swap/ s/^/# /' /etc/fstab
# check that swap is off (the Swap line should show 0)
# free -m
```
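The `sed` command above comments out every line that merely contains "swap", and running it twice adds a second `#`. A slightly stricter sketch avoids both problems, assuming the standard fstab layout:

- it only touches active entries whose filesystem type (third field) is `swap`;
- it keeps a `.bak` copy.

`disable_swap_in_fstab` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-format file.
# Only uncommented lines whose third field is "swap" are changed, so running
# it twice is harmless. A backup is written to FILE.bak.
disable_swap_in_fstab() {
  local fstab=${1:-/etc/fstab}
  sed -i.bak -E \
    '/^[[:space:]]*[^#[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/ s/^/# /' \
    "$fstab"
}
```

Run `swapoff -a` first, then `disable_swap_in_fstab` (defaults to /etc/fstab), so swap stays off after the next reboot.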

Configure the yum repository

```shell
# mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup
# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
# yum makecache
# yum install -y vim
```

Synchronize time

```shell
# yum install -y ntp
# start the service
# systemctl start ntpd
# start it at boot
# systemctl enable ntpd
```

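Configure SSH key login on k8s-master (only on k8s-master, or on every management node if there are several)

```shell
# vim /etc/ssh/sshd_config
#>> UseDNS no
#>> PermitRootLogin yes
#>> PermitEmptyPasswords no
#>> PasswordAuthentication yes
#   no reverse DNS lookups; allow root login; reject empty passwords; allow password authentication
#   (sshd_config only accepts comments on their own lines, so keep these notes out of the file)
# systemctl restart sshd
# set up passwordless login
# ssh-keygen -t rsa
# for i in k8s-node1 k8s-node2; do ssh-copy-id -i ~/.ssh/id_rsa.pub $i; done
# ssh root@k8s-node1
# ssh root@k8s-node2
```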

Upgrade the kernel (3.10 → 5.4) (all nodes)

```shell
# upgrade via RPM packages
# check the current kernel
# uname -r
#>> 3.10.0-1160.92.1.el7.x86_64
# check the OS release (cat /proc/version also shows the kernel version)
# cat /etc/centos-release
#>> CentOS Linux release 7.9.2009 (Core)
# download the new kernel RPMs
# cd /root
# wget https://mirrors.aliyun.com/elrepo/kernel/el7/x86_64/RPMS/kernel-lt-5.4.250-1.el7.elrepo.x86_64.rpm
# wget https://mirrors.aliyun.com/elrepo/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.250-1.el7.elrepo.x86_64.rpm
# copy the RPMs to the other nodes
# for i in k8s-node1 k8s-node2;do scp kernel-lt-5.4.250-1.el7.elrepo.x86_64.rpm kernel-lt-devel-5.4.250-1.el7.elrepo.x86_64.rpm $i:/root/;done
# install the new kernel RPMs (on every node)
# cd /root && yum localinstall -y kernel-lt*
# list all kernels in the grub menu
# awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg
#>> 0 : CentOS Linux (5.4.250-1.el7.elrepo.x86_64) 7 (Core)
#>> 1 : CentOS Linux (3.10.0-1160.92.1.el7.x86_64) 7 (Core)
#>> 2 : CentOS Linux (3.10.0-1160.el7.x86_64) 7 (Core)
#>> 3 : CentOS Linux (0-rescue-6a9496cb258542439f69c61bbf8a903b) 7 (Core)
# set the default boot kernel with grub2-set-default 0, or by editing /etc/default/grub
# grub2-set-default 0
# vim /etc/default/grub
#>> GRUB_TIMEOUT=5
#>> GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"
#>> GRUB_DEFAULT=0 ## set to the kernel index printed by the awk command
#>> GRUB_DISABLE_SUBMENU=true
#>> GRUB_TERMINAL_OUTPUT="console"
#>> GRUB_CMDLINE_LINUX="crashkernel=auto spectre_v2=retpoline rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet"
#>> GRUB_DISABLE_RECOVERY="true"
# regenerate the grub configuration and reboot
# grub2-mkconfig -o /boot/grub2/grub.cfg
# reboot
# check the current default kernel
# grubby --default-kernel
#>> /boot/vmlinuz-5.4.250-1.el7.elrepo.x86_64
# list all kernel RPMs on the system
# rpm -qa | grep kernel
#>> kernel-tools-3.10.0-1160.92.1.el7.x86_64
#>> kernel-tools-libs-3.10.0-1160.92.1.el7.x86_64
#>> kernel-lt-devel-5.4.250-1.el7.elrepo.x86_64
#>> kernel-3.10.0-1160.92.1.el7.x86_64
#>> kernel-3.10.0-1160.el7.x86_64
#>> kernel-lt-5.4.250-1.el7.elrepo.x86_64
# remove the old kernel RPMs
# yum remove kernel-tools-3.10.0-1160.92.1.el7.x86_64 kernel-tools-libs-3.10.0-1160.92.1.el7.x86_64 kernel-3.10.0-1160.92.1.el7.x86_64 kernel-3.10.0-1160.el7.x86_64
# or clean up old kernels with package-cleanup from yum-utils
# package-cleanup --oldkernels
```

Configure IPVS (all nodes)

```shell
# for details on IPVS see: https://icloudnative.io/posts/ipvs-how-kubernetes-services-direct-traffic-to-pods/
# install ipvsadm and related tools
# yum install ipvsadm ipset sysstat conntrack libseccomp -y
# vim /etc/modules-load.d/ipvs.conf
# add the following modules (br_netfilter provides the net.bridge.* sysctls used in the next step)
#>> ip_vs
#>> ip_vs_lc
#>> ip_vs_wlc
#>> ip_vs_rr
#>> ip_vs_wrr
#>> ip_vs_lblc
#>> ip_vs_lblcr
#>> ip_vs_dh
#>> ip_vs_sh
#>> ip_vs_fo
#>> ip_vs_nq
#>> ip_vs_sed
#>> ip_vs_ftp
#>> nf_conntrack
#>> ip_tables
#>> ip_set
#>> xt_set
#>> ipt_set
#>> ipt_rpfilter
#>> ipt_REJECT
#>> ipip
#>> br_netfilter
# load the modules now and on every boot
# systemctl enable --now systemd-modules-load.service
```
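After `systemd-modules-load` runs (or after the reboot), you can check which of the listed modules did not load. The sketch below compares the list against `/proc/modules`. Modules built into the kernel never appear in `/proc/modules`, so they would be reported too. `missing_modules` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print modules listed in a modules-load.d file that do
# not appear in /proc/modules (built-in modules are reported as well).
# $1: module list (default /etc/modules-load.d/ipvs.conf)
# $2: loaded-module table (default /proc/modules)
missing_modules() {
  local conf=${1:-/etc/modules-load.d/ipvs.conf} loaded=${2:-/proc/modules}
  local mod rest
  while read -r mod rest; do
    # skip blank lines and comments
    [[ -z $mod || $mod == \#* ]] && continue
    awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$loaded" \
      || echo "$mod"
  done < "$conf"
}
```

Empty output from `missing_modules` means every listed module is loaded.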

Kernel parameters for k8s (all nodes)

```shell
# vim /etc/sysctl.d/k8s.conf
#>> net.ipv4.ip_forward = 1
#>> net.bridge.bridge-nf-call-iptables = 1
#>> net.bridge.bridge-nf-call-ip6tables = 1
#>> fs.may_detach_mounts = 1
#>> net.ipv4.conf.all.route_localnet = 1
#>> vm.overcommit_memory=1
#>> vm.panic_on_oom=0
#>> fs.inotify.max_user_watches=89100
#>> fs.file-max=52706963
#>> fs.nr_open=52706963
#>> net.netfilter.nf_conntrack_max=2310720
#>>
#>> net.ipv4.tcp_keepalive_time = 600
#>> net.ipv4.tcp_keepalive_probes = 3
#>> net.ipv4.tcp_keepalive_intvl =15
#>> net.ipv4.tcp_max_tw_buckets = 36000
#>> net.ipv4.tcp_tw_reuse = 1
#>> net.ipv4.tcp_max_orphans = 327680
#>> net.ipv4.tcp_orphan_retries = 3
#>> net.ipv4.tcp_syncookies = 1
#>> net.ipv4.tcp_max_syn_backlog = 16384
#>> net.ipv4.ip_conntrack_max = 65536
#>> net.ipv4.tcp_timestamps = 0
#>> net.core.somaxconn = 16384
# apply the settings
# sysctl --system
# reboot
# reboot
# after the reboot, check that the IPVS and conntrack modules are loaded
# lsmod | grep --color=auto -e ip_vs -e nf_conntrack
```

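- net.ipv4.ip_forward: allow the system to forward IP packets between network interfaces

- net.bridge.bridge-nf-call-iptables: pass bridged IPv4 traffic through iptables

- net.bridge.bridge-nf-call-ip6tables: pass bridged IPv6 traffic through ip6tables

- fs.may_detach_mounts: allow a mount point to be detached while it is still in use in another mount namespace. This is a CentOS 7 3.10-kernel setting, so on the 5.4 kernel `sysctl --system` may report it as unknown.

- net.ipv4.conf.all.route_localnet: allow routing of packets with local (127.0.0.0/8) addresses

- vm.overcommit_memory: memory overcommit policy (1 = always allow overcommit)

- vm.panic_on_oom: whether the kernel panics when it runs out of memory (0 = no panic; the OOM killer handles it)

- fs.inotify.max_user_watches: maximum number of inotify watches per user

- fs.file-max: system-wide maximum number of open files

- fs.nr_open: maximum number of file handles a single process can open

- net.netfilter.nf_conntrack_max: maximum number of tracked connections

- net.ipv4.tcp_keepalive_time: idle time (seconds) before the first TCP keepalive probe is sent

- net.ipv4.tcp_keepalive_probes: number of unanswered keepalive probes before the connection is considered dead

- net.ipv4.tcp_keepalive_intvl: interval (seconds) between TCP keepalive probes

- net.ipv4.tcp_max_tw_buckets: maximum number of TIME_WAIT sockets

- net.ipv4.tcp_tw_reuse: allow TIME_WAIT sockets to be reused for new outgoing connections

- net.ipv4.tcp_max_orphans: maximum number of orphaned sockets (not attached to any process)

- net.ipv4.tcp_orphan_retries: number of retries before an orphaned connection is killed

- net.ipv4.tcp_syncookies: enable TCP SYN cookies to defend against SYN-flood attacks

- net.ipv4.tcp_max_syn_backlog: maximum number of half-open (SYN_RECV) connections queued per listener

- net.ipv4.ip_conntrack_max: legacy name for the connection-tracking limit. It is superseded by net.netfilter.nf_conntrack_max and may not exist on the 5.4 kernel.

- net.ipv4.tcp_timestamps: enable or disable TCP timestamps (0 here disables them)

- net.core.somaxconn: upper limit on the listen (accept) queue length of a socket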
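In a sysctl file, later assignments silently override earlier ones, and duplicate keys are easy to miss in a long list like the one above. Below is a small awk-based sketch that reports keys set more than once. `duplicate_sysctl_keys` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print sysctl keys that are assigned more than once in
# a sysctl.d file (comment lines starting with # or ; are ignored).
duplicate_sysctl_keys() {
  awk -F= '
    /^[[:space:]]*[#;]/ { next }          # skip comment lines
    {
      key = $1
      gsub(/[[:space:]]/, "", key)        # "net.core.somaxconn " -> "net.core.somaxconn"
      if (key != "" && seen[key]++ == 1) print key
    }
  ' "${1:-/etc/sysctl.d/k8s.conf}"
}
```

Run `duplicate_sysctl_keys /etc/sysctl.d/k8s.conf` before `sysctl --system`. Empty output means every key is set once.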

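Base components: Docker (all nodes)

Runtime choice: dockershim was removed in k8s 1.24, so from 1.24 on the runtime is containerd (or Docker through cri-dockerd). This guide installs k8s v1.22, where Docker still works through the built-in dockershim.

Use Docker as the runtime: install docker-ce 20.10.

```shell
# yum install -y yum-utils
# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# yum install -y docker-ce-20.10.* docker-ce-cli-20.10.*
```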

Newer kubelets recommend the systemd cgroup driver, so set Docker's native.cgroupdriver to systemd. The kubelet and Docker must use the same driver. Also configure Docker Hub registry mirrors:

```shell
# mkdir -p /etc/docker
# vim /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": [
    "https://hub-mirror.c.163.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://registry.docker-cn.com"
  ]
}
```

Start Docker on all nodes and enable it at boot

```shell
# systemctl daemon-reload && systemctl enable --now docker
```

Base components: k8s

Download and unpack the Kubernetes and etcd binaries (on k8s-master)

```shell
# on the k8s-master node
# download the k8s server binaries
# wget https://dl.k8s.io/v1.22.0/kubernetes-server-linux-amd64.tar.gz
# download etcd
# wget https://github.com/etcd-io/etcd/releases/download/v3.4.27/etcd-v3.4.27-linux-amd64.tar.gz
# unpack the k8s and etcd binaries into /usr/local/bin
# tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}
# tar -zxvf etcd-v3.4.27-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-v3.4.27-linux-amd64/etcd{,ctl}
```

Distribute the binaries

```shell
# HA setup: copy the binaries to the other masters
# MasterNodes='k8s-master2 k8s-master3'
# for NODE in $MasterNodes; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done
# copy kubelet and kube-proxy to the worker nodes
# for NODE in k8s-node1 k8s-node2;do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ;done
```

Create the /opt/cni/bin directory on all nodes

```shell
# mkdir -p /opt/cni/bin
```

Generate certificates

Certificates are generated with the CFSSL tool. This is done on k8s-master only.

```shell
# wget "https://pkg.cfssl.org/R1.2/cfssl_linux-amd64" -O /usr/local/bin/cfssl
# wget "https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64" -O /usr/local/bin/cfssljson
# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson
# if the download fails, download the files manually, then rename them and move them into place
# mv cfssl_linux-amd64 /usr/local/bin/cfssl
# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
# check the installation
# cfssl version
#>> Version: 1.2.0
#>> Revision: dev
#>> Runtime: go1.6
```

etcd certificates: needed on the master nodes

```shell
# mkdir -p /etc/etcd/ssl
```

K8s certificates: all nodes

```shell
# mkdir -p /etc/kubernetes/pki
```

- Reference: https://github.com/dotbalo/k8s-ha-install/tree/manual-installation-v1.22.x

- Reference: https://www.cnblogs.com/machangwei-8/p/16840481.html#_label3

These references mainly provide the configuration files needed for a manual k8s installation.

```shell
# mkdir -p /root/k8s-ha && cd /root/k8s-ha
# vim ca-config.json
#>> {
#>>   "signing": {
#>>     "default": {
#>>       "expiry": "876000h"
#>>     },
#>>     "profiles": {
#>>       "kubernetes": {
#>>         "usages": [
#>>           "signing",
#>>           "key encipherment",
#>>           "server auth",
#>>           "client auth"
#>>         ],
#>>         "expiry": "876000h"
#>>       }
#>>     }
#>>   }
#>> }
# vim /root/k8s-ha/etcd-ca-csr.json
#>> {
#>>   "CN": "etcd",
#>>   "key": {
#>>     "algo": "rsa",
#>>     "size": 2048
#>>   },
#>>   "names": [
#>>     {
#>>       "C": "CN",
#>>       "ST": "Beijing",
#>>       "L": "Beijing",
#>>       "O": "etcd",
#>>       "OU": "Etcd Security"
#>>     }
#>>   ],
#>>   "ca": {
#>>     "expiry": "876000h"
#>>   }
#>> }
# vim /root/k8s-ha/etcd-csr.json
#>> {
#>>   "CN": "etcd",
#>>   "key": {
#>>     "algo": "rsa",
#>>     "size": 2048
#>>   },
#>>   "names": [
#>>     {
#>>       "C": "CN",
#>>       "ST": "Beijing",
#>>       "L": "Beijing",
#>>       "O": "etcd",
#>>       "OU": "Etcd Security"
#>>     }
#>>   ]
#>> }
# generate the etcd CA certificate
# cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca
#>> 2023/08/19 22:47:44 [INFO] generating a new CA key and certificate from CSR
#>> 2023/08/19 22:47:44 [INFO] generate received request
#>> 2023/08/19 22:47:44 [INFO] received CSR
#>> 2023/08/19 22:47:44 [INFO] generating key: rsa-2048
#>> 2023/08/19 22:47:44 [INFO] encoded CSR
#>> 2023/08/19 22:47:44 [INFO] signed certificate with serial number 602044406162881782857402889892933919790484871979
# ll /etc/etcd/ssl/
#>> -rw-r--r-- 1 root root 1005 Aug 19 22:47 etcd-ca.csr
#>> -rw------- 1 root root 1679 Aug 19 22:47 etcd-ca-key.pem
#>> -rw-r--r-- 1 root root 1367 Aug 19 22:47 etcd-ca.pem
# issue the etcd certificate signed by the etcd CA; -hostname lists 127.0.0.1 plus every master's hostname and IP, comma-separated
# cfssl gencert -ca=/etc/etcd/ssl/etcd-ca.pem -ca-key=/etc/etcd/ssl/etcd-ca-key.pem -config=ca-config.json -hostname=127.0.0.1,k8s-master,192.168.163.100 -profile=kubernetes etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd
```

Generate the k8s certificates

```shell
# vim /root/k8s-ha/ca-csr.json
#>> {
#>>   "CN": "kubernetes",
#>>   "key": {
#>>     "algo": "rsa",
#>>     "size": 2048
#>>   },
#>>   "names": [
#>>     {
#>>       "C": "CN",
#>>       "ST": "Beijing",
#>>       "L": "Beijing",
#>>       "O": "Kubernetes",
#>>       "OU": "Kubernetes-manual"
#>>     }
#>>   ],
#>>   "ca": {
#>>     "expiry": "876000h"
#>>   }
#>> }
# cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca
# 10.96.0.1 is the first address of the service CIDR (the ClusterIP of the kubernetes Service), so it must be in the apiserver certificate.
# In an HA cluster 192.168.163.100 would be the virtual IP (VIP); without HA, use k8s-master's own IP, as here.
# cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -hostname=10.96.0.1,192.168.163.100,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.163.101,192.168.163.102 -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver
```
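The commands here and below reference several CSR files whose contents are not shown:

- `apiserver-csr.json`
- `front-proxy-ca-csr.json`
- `front-proxy-client-csr.json`
- `manager-csr.json`
- `scheduler-csr.json`
- `admin-csr.json`

Below is a sketch of a helper that writes them in the same layout as `ca-csr.json`. `write_csr` is hypothetical. The C/ST/L/OU values are simply copied from `ca-csr.json` and carry no special meaning.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a cfssl CSR JSON file laid out like ca-csr.json.
# Usage: write_csr FILE CN O [OU]
write_csr() {
  local file=$1 cn=$2 org=$3 ou=${4:-Kubernetes-manual}
  cat > "$file" <<EOF
{
  "CN": "$cn",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "ST": "Beijing", "L": "Beijing", "O": "$org", "OU": "$ou" }
  ]
}
EOF
}
```

For the client certificates, CN and O matter because the apiserver maps them to a user and groups:

- `system:kube-controller-manager` and `system:kube-scheduler` are the user names that Kubernetes' default RBAC bindings expect.
- O `system:masters` gives the admin certificate cluster-admin rights.
- The front-proxy client's CN should appear in the apiserver's `--requestheader-allowed-names`, which is `aggregator` in this guide.

A possible set of calls:

```shell
write_csr admin-csr.json admin system:masters
write_csr manager-csr.json system:kube-controller-manager system:kube-controller-manager
write_csr scheduler-csr.json system:kube-scheduler system:kube-scheduler
write_csr front-proxy-client-csr.json aggregator Kubernetes
```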

```shell
# vim front-proxy-ca-csr.json
# vim front-proxy-client-csr.json
# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca
# cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client
```

```shell
# vim manager-csr.json
# cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager
# use kubectl to generate the kubeconfig for the Controller Manager
# the Controller Manager talks to the APIServer; its kubeconfig holds the connection details, such as the certificates and the APIServer address
# with a single master, --server points at k8s-master's IP and the apiserver's --secure-port (16443 in this guide; the apiserver default is 6443)
# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.163.100:16443 --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
#> Cluster "kubernetes" set.
# add a context to the kubeconfig
# kubectl config set-context system:kube-controller-manager@kubernetes --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
#> Context "system:kube-controller-manager@kubernetes" created.
# add a user entry to the kubeconfig
# kubectl config set-credentials system:kube-controller-manager --client-certificate=/etc/kubernetes/pki/controller-manager.pem --client-key=/etc/kubernetes/pki/controller-manager-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
#> User "system:kube-controller-manager" set.
# make system:kube-controller-manager@kubernetes the default context
# kubectl config use-context system:kube-controller-manager@kubernetes --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
#> Switched to context "system:kube-controller-manager@kubernetes".
```

```shell
# vim scheduler-csr.json
# generate the certificate
# cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler
# generate the scheduler's kubeconfig
# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.163.100:16443 --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
#> Cluster "kubernetes" set.
# kubectl config set-credentials system:kube-scheduler --client-certificate=/etc/kubernetes/pki/scheduler.pem --client-key=/etc/kubernetes/pki/scheduler-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
#> User "system:kube-scheduler" set.
# kubectl config set-context system:kube-scheduler@kubernetes --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
#> Context "system:kube-scheduler@kubernetes" created.
# kubectl config use-context system:kube-scheduler@kubernetes --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
#> Switched to context "system:kube-scheduler@kubernetes".
```

- Generate a kubeconfig for kubectl to connect to the API Server

```shell
# vim admin-csr.json
# generate the admin user's certificate
# cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin
# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.163.100:16443 --kubeconfig=/etc/kubernetes/admin.kubeconfig
#> Cluster "kubernetes" set.
# kubectl config set-credentials kubernetes-admin --client-certificate=/etc/kubernetes/pki/admin.pem --client-key=/etc/kubernetes/pki/admin-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/admin.kubeconfig
#> User "kubernetes-admin" set.
# kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --kubeconfig=/etc/kubernetes/admin.kubeconfig
#> Context "kubernetes-admin@kubernetes" created.
# kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig
#> Switched to context "kubernetes-admin@kubernetes".
```
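The same four `kubectl config` commands are repeated for the controller-manager, scheduler and admin kubeconfigs. The sketch below prints them for one component so they can be reviewed and then piped to `sh`. `kubeconfig_cmds` is hypothetical. The default server URL is the `https://192.168.163.100:16443` used above, and the cert prefix assumes the `/etc/kubernetes/pki/<name>.pem` / `<name>-key.pem` naming from this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the kubectl commands that build a component kubeconfig.
# Usage: kubeconfig_cmds NAME USER CERT_PREFIX [SERVER]
#   NAME        writes /etc/kubernetes/NAME.kubeconfig
#   USER        user name; the context is USER@kubernetes
#   CERT_PREFIX uses /etc/kubernetes/pki/CERT_PREFIX.pem and CERT_PREFIX-key.pem
kubeconfig_cmds() {
  local name=$1 user=$2 cert=$3 server=${4:-https://192.168.163.100:16443}
  local kc=/etc/kubernetes/$name.kubeconfig pki=/etc/kubernetes/pki
  echo "kubectl config set-cluster kubernetes --certificate-authority=$pki/ca.pem --embed-certs=true --server=$server --kubeconfig=$kc"
  echo "kubectl config set-credentials $user --client-certificate=$pki/$cert.pem --client-key=$pki/$cert-key.pem --embed-certs=true --kubeconfig=$kc"
  echo "kubectl config set-context $user@kubernetes --cluster=kubernetes --user=$user --kubeconfig=$kc"
  echo "kubectl config use-context $user@kubernetes --kubeconfig=$kc"
}
```

Usage:

- `kubeconfig_cmds controller-manager system:kube-controller-manager controller-manager | sh`
- `kubeconfig_cmds scheduler system:kube-scheduler scheduler | sh`
- `kubeconfig_cmds admin kubernetes-admin admin | sh`

Printing instead of executing keeps a dry-run view of exactly what will change.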

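Generate the ServiceAccount key pair on k8s-master. The private key signs ServiceAccount tokens, and the public key verifies them.

```shell
# openssl genrsa -out /etc/kubernetes/pki/sa.key 2048
# openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub
#> writing RSA key
```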

Copy the certificates to the other master nodes. This is not needed here, since this is a single-master setup.

```shell
# multiple masters only
# for NODE in k8s-master2 k8s-master3;do for FILE in $(ls /etc/kubernetes/pki | grep -v etcd);do scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE};done; for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig;do scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE};done;done
```

Configure etcd

```shell
# vim /etc/etcd/etcd.config.yml
```

```yaml
name: 'k8s-master1'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://192.168.163.100:2380'
listen-client-urls: 'https://192.168.163.100:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://192.168.163.100:2380'
advertise-client-urls: 'https://192.168.163.100:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master1=https://192.168.163.100:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
```

Create the etcd systemd service on k8s-master and start it

```shell
# vim /usr/lib/systemd/system/etcd.service
```

```ini
[Unit]
Description=Etcd Service
Documentation=https://coreos.com/etcd/docs/latest/
After=network.target

[Service]
Type=notify
ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml
Restart=on-failure
RestartSec=10
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
Alias=etcd3.service
```

Link the etcd certificates into /etc/kubernetes/pki/etcd on k8s-master (the paths used in etcd.config.yml), then start etcd

```shell
# mkdir -p /etc/kubernetes/pki/etcd
# ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/
# systemctl daemon-reload
# start etcd now and at boot
# systemctl enable --now etcd
# test
# etcdctl --endpoints="192.168.163.100:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint health
#>> 192.168.163.100:2379 is healthy: successfully committed proposal: took = 7.792763ms
```

k8s-master node configuration

```shell
# vim /usr/lib/systemd/system/kube-apiserver.service
## service CIDR: 10.96.0.0/16
## node network: 192.168.163.0/24
## pod CIDR: 172.16.0.0/12
```

```ini
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-apiserver \
      --v=2 \
      --logtostderr=true \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=16443 \
      --insecure-port=0 \
      --advertise-address=192.168.163.100 \
      --service-cluster-ip-range=10.96.0.0/16 \
      --service-node-port-range=30000-32767 \
      --etcd-servers=https://192.168.163.100:2379 \
      --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
      --etcd-certfile=/etc/etcd/ssl/etcd.pem \
      --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
      --service-account-issuer=https://kubernetes.default.svc.cluster.local \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
      --authorization-mode=RBAC,Node \
      --enable-bootstrap-token-auth=true \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-allowed-names=aggregator \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
```

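`--secure-port=16443` must be used consistently: every kubeconfig's `--server` URL has to use the same port. Otherwise kube-controller-manager and kube-scheduler both fail with `dial tcp 192.168.163.100:16443: connect: connection refused`.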
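You can catch this mismatch before starting the components. The sketch below reads `--secure-port` from the apiserver unit and checks that each kubeconfig's `server:` URL uses the same port. It assumes kubeconfigs written by `kubectl config set-cluster`, which contain a `server: https://host:port` line. `check_apiserver_port` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare kube-apiserver's --secure-port with the port in
# each kubeconfig's "server:" URL. Prints offending files, returns 1 on mismatch.
# Usage: check_apiserver_port UNIT_FILE KUBECONFIG...
check_apiserver_port() {
  local unit=$1 port f rc=0
  shift
  port=$(grep -o -- '--secure-port=[0-9]*' "$unit" | cut -d= -f2)
  port=${port:-6443}   # kube-apiserver's default secure port
  for f in "$@"; do
    if ! grep -qE "server: https://[^ ]+:${port}\$" "$f"; then
      echo "port mismatch (apiserver listens on $port): $f"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_apiserver_port /usr/lib/systemd/system/kube-apiserver.service /etc/kubernetes/*.kubeconfig`.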

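`--insecure-port=0` disables the insecure HTTP port (8080 by default). kubectl may then report `The connection to the server localhost:8080 was refused - did you specify the right host or port?`. This means kubectl has no kubeconfig and is falling back to localhost:8080. Give it the admin kubeconfig:

```shell
# mkdir -p /root/.kube
# cp /etc/kubernetes/admin.kubeconfig /root/.kube/config
```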

启动ApiServer并查看ApiServer状态

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

|

# systemctl daemon-reload && systemctl enable --now kube-apiserver

# systemctl status kube-apiserver

# If the unit file is changed later, reload systemd and restart the service

# systemctl daemon-reload && systemctl restart kube-apiserver

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2023-08-21 01:22:17 CST; 7s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 57006 (kube-apiserver)

Tasks: 7

Memory: 367.8M

CGroup: /system.slice/kube-apiserver.service

└─57006 /usr/local/bin/kube-apiserver --v=2 --logtostderr=true --allow-privileged=true --bind-address=0.0.0.0 --secure-port=6443 --insecure-p...

Aug 21 01:22:21 k8s-master kube-apiserver[57006]: W0821 01:22:21.516083 57006 genericapiserver.go:455] Skipping API apps/v1beta2 because it ha...sources.

Aug 21 01:22:21 k8s-master kube-apiserver[57006]: W0821 01:22:21.516137 57006 genericapiserver.go:455] Skipping API apps/v1beta1 because it ha...sources.

Aug 21 01:22:21 k8s-master kube-apiserver[57006]: W0821 01:22:21.538251 57006 genericapiserver.go:455] Skipping API admissionregistration.k8s....sources.

Aug 21 01:22:21 k8s-master kube-apiserver[57006]: I0821 01:22:21.563399 57006 plugins.go:158] Loaded 12 mutating admission controller(s) successfully ...

Aug 21 01:22:21 k8s-master kube-apiserver[57006]: I0821 01:22:21.563445 57006 plugins.go:161] Loaded 11 validating admission controller(s) successfull...

Aug 21 01:22:21 k8s-master kube-apiserver[57006]: I0821 01:22:21.576508 57006 store.go:1434] "Monitoring resource count at path" resource="api...ervices"

Aug 21 01:22:21 k8s-master kube-apiserver[57006]: I0821 01:22:21.642371 57006 cacher.go:406] cacher (*apiregistration.APIService): initialized

Aug 21 01:22:21 k8s-master kube-apiserver[57006]: W0821 01:22:21.684472 57006 genericapiserver.go:455] Skipping API apiregistration.k8s.io/v1b...sources.

Aug 21 01:22:21 k8s-master kube-apiserver[57006]: I0821 01:22:21.686377 57006 dynamic_serving_content.go:110] "Loaded a new cert/key pair" nam...key.pem"

Aug 21 01:22:24 k8s-master kube-apiserver[57006]: I0821 01:22:24.342243 57006 aggregator.go:109] Building initial OpenAPI spec

Hint: Some lines were ellipsized, use -l to show in full.

|

|

# vim /usr/lib/systemd/system/kube-controller-manager.service

|

|

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-controller-manager \
  --v=2 \
  --logtostderr=true \
  --address=127.0.0.1 \
  --root-ca-file=/etc/kubernetes/pki/ca.pem \
  --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
  --service-account-private-key-file=/etc/kubernetes/pki/sa.key \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \
  --leader-elect=true \
  --use-service-account-credentials=true \
  --node-monitor-grace-period=40s \
  --node-monitor-period=5s \
  --pod-eviction-timeout=2m0s \
  --controllers=*,bootstrapsigner,tokencleaner \
  --allocate-node-cidrs=true \
  --cluster-cidr=172.16.0.0/12 \
  --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
  --node-cidr-mask-size=24
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target

|
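With --allocate-node-cidrs=true, kube-controller-manager splits --cluster-cidr into one Pod subnet per node, each sized by --node-cidr-mask-size. The worked arithmetic for the values above is sketched below in plain shell; the function names are illustrative only.

```shell
#!/bin/sh
# Worked arithmetic for --cluster-cidr=172.16.0.0/12 with --node-cidr-mask-size=24.

pow2() {
  # Print 2 raised to the power $1 (non-negative integer).
  n=$1
  r=1
  while [ "$n" -gt 0 ]; do
    r=$((r * 2))
    n=$((n - 1))
  done
  echo "$r"
}

node_subnets() {
  # $1 = cluster CIDR prefix length, $2 = per-node mask size.
  # Each extra prefix bit halves the block, so there are 2^($2 - $1) node subnets.
  pow2 $(($2 - $1))
}

addresses_per_node() {
  # $1 = per-node mask size; an IPv4 /N block holds 2^(32 - N) addresses.
  pow2 $((32 - $1))
}

echo "node subnets:       $(node_subnets 12 24)"    # 4096
echo "addresses per node: $(addresses_per_node 24)" # 256
```

So 172.16.0.0/12 split into /24 blocks leaves room for 4096 nodes with 256 addresses each. That comfortably covers the maxPods: 110 set in the kubelet configuration.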

Start kube-controller-manager and check its status

|

# systemctl daemon-reload && systemctl enable --now kube-controller-manager

# systemctl status kube-controller-manager

|

|

● kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2023-08-21 01:28:31 CST; 7s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 59613 (kube-controller)

Tasks: 5

Memory: 129.3M

CGroup: /system.slice/kube-controller-manager.service

└─59613 /usr/local/bin/kube-controller-manager --v=2 --logtostderr=true --address=127.0.0.1 --root-ca-file=/etc/kubernetes/pki/ca.pem --clust...

Aug 21 01:28:33 k8s-master kube-controller-manager[59613]: I0821 01:28:33.582770 59613 controllermanager.go:186] Version: v1.22.0

Aug 21 01:28:33 k8s-master kube-controller-manager[59613]: I0821 01:28:33.584593 59613 tlsconfig.go:178] "Loaded client CA" index=0 certName="request-...

Aug 21 01:28:33 k8s-master kube-controller-manager[59613]: I0821 01:28:33.584804 59613 tlsconfig.go:200] "Loaded serving cert" certName="Generated sel...

Aug 21 01:28:33 k8s-master kube-controller-manager[59613]: I0821 01:28:33.585009 59613 named_certificates.go:53] "Loaded SNI cert" index=0 certName="s...

Aug 21 01:28:33 k8s-master kube-controller-manager[59613]: I0821 01:28:33.585126 59613 secure_serving.go:195] Serving securely on [::]:10257

Aug 21 01:28:33 k8s-master kube-controller-manager[59613]: I0821 01:28:33.585846 59613 leaderelection.go:248] attempting to acquire leader leas...ager...

Aug 21 01:28:33 k8s-master kube-controller-manager[59613]: I0821 01:28:33.586297 59613 tlsconfig.go:240] "Starting DynamicServingCertificateController"

Aug 21 01:28:33 k8s-master kube-controller-manager[59613]: I0821 01:28:33.586566 59613 dynamic_cafile_content.go:155] "Starting controller" nam...ca.pem"

Aug 21 01:28:33 k8s-master kube-controller-manager[59613]: E0821 01:28:33.587271 59613 leaderelection.go:330] error retrieving resource lock kube-syst...

Aug 21 01:28:35 k8s-master kube-controller-manager[59613]: E0821 01:28:35.635266 59613 leaderelection.go:330] error retrieving resource lock kube-syst...

Hint: Some lines were ellipsized, use -l to show in full.

|

|

# vim /usr/lib/systemd/system/kube-scheduler.service

|

|

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-scheduler \
  --v=2 \
  --logtostderr=true \
  --address=127.0.0.1 \
  --leader-elect=true \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target

|

|

# systemctl daemon-reload

# systemctl enable --now kube-scheduler

# systemctl status kube-scheduler

|

|

● kube-scheduler.service - Kubernetes Scheduler

Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2023-08-21 03:32:05 CST; 1min 2s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 110089 (kube-scheduler)

Tasks: 7

Memory: 18.7M

CGroup: /system.slice/kube-scheduler.service

└─110089 /usr/local/bin/kube-scheduler --v=2 --logtostderr=true --address=127.0.0.1 --leader-elect=true --kubeconfig=/etc/kubernetes/schedule...

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: I0821 03:32:06.555051 110089 server.go:140] "Starting Kubernetes Scheduler version" version="v1.22.0"

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: W0821 03:32:06.556020 110089 authorization.go:47] Authorization is disabled

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: W0821 03:32:06.556033 110089 authentication.go:47] Authentication is disabled

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: I0821 03:32:06.556042 110089 deprecated_insecure_serving.go:51] Serving healthz insecurely o....1:10251

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: I0821 03:32:06.557216 110089 tlsconfig.go:200] "Loaded serving cert" certName="Generated self signed...

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: I0821 03:32:06.557365 110089 named_certificates.go:53] "Loaded SNI cert" index=0 certName="self-sign...

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: I0821 03:32:06.557416 110089 secure_serving.go:195] Serving securely on [::]:10259

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: I0821 03:32:06.557542 110089 tlsconfig.go:240] "Starting DynamicServingCertificateController"

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: I0821 03:32:06.659530 110089 leaderelection.go:248] attempting to acquire leader lease kube-...duler...

Aug 21 03:32:06 k8s-master kube-scheduler[110089]: I0821 03:32:06.674955 110089 leaderelection.go:258] successfully acquired lease kube-system/...cheduler

Hint: Some lines were ellipsized, use -l to show in full.

|

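If kube-controller-manager or kube-scheduler reports errors, check two things: 1. whether kube-apiserver itself is healthy; 2. whether authorization is set up correctly.

The reason is shown in the figure below: both components reach the cluster only through kube-apiserver, using the credentials in their kubeconfig files.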

TLS Bootstrapping configuration

|

# Configure the bootstrap credentials (kubelet bootstrap kubeconfig)

# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.163.100:16443 --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# kubectl config set-credentials tls-bootstrap-token-user --token=c8ad9d.2e4d610cf3e7426f --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes --user=tls-bootstrap-token-user --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# kubectl config use-context tls-bootstrap-token-user@kubernetes --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

|

bootstrap.secret.yaml

|

apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-c8ad9d
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: c8ad9d
  token-secret: 2e4d610cf3e7426f
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
- apiGroups:
  - ""
  resources:
  - nodes/proxy
  - nodes/stats
  - nodes/log
  - nodes/spec
  - nodes/metrics
  verbs:
  - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: kube-apiserver

|
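Three values have to agree with each other:

- the token passed to kubectl config set-credentials --token=c8ad9d.2e4d610cf3e7426f must equal token-id, a dot, then token-secret from this Secret;
- the Secret must be named bootstrap-token- followed by the token ID (here bootstrap-token-c8ad9d), in kube-system;
- bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.

If you would rather not reuse the example token, the sketch below generates and checks one. The helper names are hypothetical.

```shell
#!/bin/sh
# Generate and validate a bootstrap token of the form [a-z0-9]{6}.[a-z0-9]{16}.

gen_token() {
  # 3 random bytes -> 6 lowercase hex chars; 8 random bytes -> 16 hex chars.
  id=$(head -c 3 /dev/urandom | od -An -tx1 | tr -d ' \n')
  secret=$(head -c 8 /dev/urandom | od -An -tx1 | tr -d ' \n')
  echo "$id.$secret"
}

valid_token() {
  # Succeeds only if $1 matches the bootstrap token format.
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

token=$(gen_token)
echo "token:        $token"
echo "token-id:     ${token%%.*}"
echo "token-secret: ${token#*.}"
echo "Secret name:  bootstrap-token-${token%%.*}"
```

If you switch to a generated token, substitute it in the set-credentials command and in bootstrap.secret.yaml (the Secret name, token-id and token-secret) together.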

|

# kubectl create -f bootstrap.secret.yaml

secret/bootstrap-token-c8ad9d created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-certificate-rotation created

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created

|

Node configuration (copy the CA certificates and the bootstrap kubeconfig to the worker nodes)

|

# cd /etc/kubernetes/

# for NODE in k8s-node1 k8s-node2; do
    for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do
      scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}
    done
  done

|

|

# On all nodes

# mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/

|

vim /usr/lib/systemd/system/kubelet.service

|

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/local/bin/kubelet

Restart=always

StartLimitInterval=0

RestartSec=10

[Install]

WantedBy=multi-user.target

|

If the container runtime is Docker, use the following kubelet configuration:

vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

|

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' "

ExecStart=

ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

|

vim /etc/kubernetes/kubelet-conf.yml

|

apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s

|
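cgroupDriver: systemd has to match the cgroup driver of the container runtime. With Docker, a mismatch keeps the kubelet from starting. The sketch below is a pre-flight check; the helper name is hypothetical. It assumes Docker's driver is read with docker info --format '{{.CgroupDriver}}' and that cgroupDriver is an unquoted top-level key, as in the file above.

```shell
#!/bin/sh
# Compare the kubelet's cgroupDriver with the container runtime's cgroup driver.

check_cgroup_driver() {
  # $1 = kubelet config file, $2 = runtime cgroup driver (e.g. from docker info)
  kubelet_driver=$(sed -n 's/^cgroupDriver: *//p' "$1" | head -n 1)
  if [ "$kubelet_driver" = "$2" ]; then
    echo "ok: $2"
  else
    echo "mismatch: kubelet=$kubelet_driver runtime=$2"
    return 1
  fi
}

# Usage on a node (requires Docker):
#   check_cgroup_driver /etc/kubernetes/kubelet-conf.yml "$(docker info --format '{{.CgroupDriver}}')"
```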

|

# systemctl daemon-reload && systemctl enable --now kubelet

# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master NotReady <none> 85s v1.22.0

k8s-node1 NotReady <none> 81s v1.22.0

k8s-node2 NotReady <none> 77s v1.22.0

# On every node, check the system log for errors (ignore CNI errors for now)

# tail -f /var/log/messages

|
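The nodes stay NotReady until a CNI network plugin (Calico, below) is running. To list only the nodes that are not yet Ready, a small filter over kubectl get node output is enough. The function name is hypothetical, and the filter assumes the default column layout (NAME STATUS ...).

```shell
#!/bin/sh
# Print the names of nodes whose STATUS does not start with "Ready".
# Cordoned nodes ("Ready,SchedulingDisabled") are therefore not listed.
# The header line, if present, is skipped.
not_ready_nodes() {
  awk '$1 != "NAME" && $2 !~ /^Ready/ { print $1 }'
}

# Usage:
#   kubectl get node | not_ready_nodes
```

Once the network plugin is up, kubectl wait --for=condition=Ready node --all --timeout=300s blocks until every node reports Ready.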

Issue credentials for kube-proxy (on k8s-master only, run once)

|

# kubectl -n kube-system create serviceaccount kube-proxy

# kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy

# SECRET=$(kubectl -n kube-system get sa/kube-proxy --output=jsonpath='{.secrets[0].name}')

# JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET --output=jsonpath='{.data.token}' | base64 -d)

# PKI_DIR=/etc/kubernetes/pki

# K8S_DIR=/etc/kubernetes

# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.163.100:16443 --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

# kubectl config set-credentials kubernetes --token=${JWT_TOKEN} --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

# kubectl config set-context kubernetes --cluster=kubernetes --user=kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

# kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

|

Distribute kube-proxy.kubeconfig to the worker nodes

|

for NODE in k8s-node1 k8s-node2; do

scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

done

|

Configuration on all nodes

vim /usr/lib/systemd/system/kube-proxy.service

|

[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.yaml \
  --v=2
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target

|

vim /etc/kubernetes/kube-proxy.yaml

|

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.16.0.0/12
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms

|
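The Pod CIDR 172.16.0.0/12 has to be identical in three places:

- --cluster-cidr in the kube-controller-manager unit;
- clusterCIDR in kube-proxy.yaml;
- the POD_CIDR substitution in calico.yaml.

The sketch below is a simple substring check that the same CIDR string appears in each file. The helper name is hypothetical, and it does not parse YAML or flags.

```shell
#!/bin/sh
# Check that the same Pod CIDR string appears in every given file.
check_pod_cidr() {
  # $1 = CIDR, remaining args = files to check
  cidr=$1
  shift
  status=0
  for f in "$@"; do
    if grep -qF -- "$cidr" "$f"; then
      echo "ok: $f"
    else
      echo "missing: $f"
      status=1
    fi
  done
  return $status
}

# Usage (paths as used in this document):
#   check_pod_cidr 172.16.0.0/12 \
#     /usr/lib/systemd/system/kube-controller-manager.service \
#     /etc/kubernetes/kube-proxy.yaml \
#     calico.yaml
```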

|

# systemctl daemon-reload && systemctl enable --now kube-proxy

# systemctl status kube-proxy

|

|

# Error 1: check authorization; regenerate the JWT token, rebuild the kubeconfig, redistribute it, then run systemctl daemon-reload && systemctl restart kube-proxy

kube-proxy k8s.io/client-go/informers/factory.go:134: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:anonymous" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope

# Error 2: see https://github.com/kubernetes/kubernetes/issues/104394

# Revisit after Calico is installed

Aug 21 11:17:58 k8s-master kube-proxy[39513]: E0821 11:17:58.679327 39513 event_broadcaster.go:253] Server rejected event '&v1.Event{TypeMeta:v1.TypeMeta{Kind:"", APIVersion:""}, ObjectMeta:v1.ObjectMeta{Name:"k8s-master.177d47b53b7c49ed", GenerateName:"", Namespace:"default", SelfLink:"", UID:"", ResourceVersion:"", Generation:0, CreationTimestamp:v1.Time{Time:time.Time{wall:0x0, ext:0, loc:(*time.Location)(nil)}}, DeletionTimestamp:(*v1.Time)(nil), DeletionGracePeriodSeconds:(*int64)(nil), Labels:map[string]string(nil), Annotations:map[string]string(nil), OwnerReferences:[]v1.OwnerReference(nil), Finalizers:[]string(nil), ClusterName:"", ManagedFields:[]v1.ManagedFieldsEntry(nil)}, EventTime:v1.MicroTime{Time:time.Time{wall:0xc130d3b9a829c7b1, ext:70300376, loc:(*time.Location)(0x2d81340)}}, Series:(*v1.EventSeries)(nil), ReportingController:"kube-proxy", ReportingInstance:"kube-proxy-k8s-master", Action:"StartKubeProxy", Reason:"Starting", Regarding:v1.ObjectReference{Kind:"Node", Namespace:"", Name:"k8s-master", UID:"k8s-master", APIVersion:"", ResourceVersion:"", FieldPath:""}, Related:(*v1.ObjectReference)(nil), Note:"", Type:"Normal", DeprecatedSource:v1.EventSource{Component:"", Host:""}, DeprecatedFirstTimestamp:v1.Time{Time:time.Time{wall:0x0, ext:0, loc:(*time.Location)(nil)}}, DeprecatedLastTimestamp:v1.Time{Time:time.Time{wall:0x0, ext:0, loc:(*time.Location)(nil)}}, DeprecatedCount:0}': 'Event "k8s-master.177d47b53b7c49ed" is invalid: involvedObject.namespace: Invalid value: "": does not match event.namespace' (will not retry!)

|
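Error 1 shows the request arriving as system:anonymous. That means kube-proxy presented no usable credential, for example because JWT_TOKEN came out empty. Before rebuilding the kubeconfig, decode the token's payload and confirm that its sub claim is system:serviceaccount:kube-system:kube-proxy. The sketch below is hypothetical. It only decodes the payload and does not verify the signature, and it assumes a base64 that accepts -d, as used earlier in this section.

```shell
#!/bin/sh
# Decode the payload (second dot-separated part) of a JWT without verifying it.
jwt_payload() {
  # JWTs use unpadded base64url: map -_ back to +/ and restore '=' padding.
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  case $(( ${#p} % 4 )) in
    2) p="$p==" ;;
    3) p="$p=" ;;
  esac
  printf '%s' "$p" | base64 -d
}

# Usage on k8s-master:
#   [ -n "$JWT_TOKEN" ] || echo "JWT_TOKEN is empty"
#   jwt_payload "$JWT_TOKEN"; echo
```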

Calico

|

# Get calico.yaml from https://github.com/dotbalo/k8s-ha-install/blob/manual-installation-v1.22.x/calico/calico.yaml

# Set the Pod CIDR

# sed -i "s#POD_CIDR#172.16.0.0/12#g" calico.yaml

# Verify the substitution

# grep -n "172.16.0.0/12" calico.yaml

# Deploy

# kubectl apply -f calico.yaml

# Check

# kubectl get po -n kube-system

calico-kube-controllers-66686fdb54-29v7n 0/1 ContainerCreating 0 112s

calico-node-65sqh 0/1 Running 0 112s

calico-node-6vl2x 0/1 Running 0 112s

calico-node-j6bx4 0/1 Running 0 112s

calico-typha-67c6dc57d6-85gnd 0/1 Running 0 112s

calico-typha-67c6dc57d6-fr9mr 0/1 Running 0 112s

calico-typha-67c6dc57d6-l24p9 0/1 Running 0 112s

|
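In the listing above the Calico pods are still starting (READY 0/1). The filter below prints only the pods whose ready count is below the desired count. It assumes the default kubectl get po column layout (NAME READY STATUS RESTARTS AGE), and the function name is hypothetical.

```shell
#!/bin/sh
# Print pods whose READY column (ready/total) is not yet complete.
# Reads `kubectl get po` output; a header line, if present, is skipped.
unready_pods() {
  awk '$1 != "NAME" { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Usage:
#   kubectl get po -n kube-system | unready_pods
```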

References

CentOS mirror